Applications & Show Cases

Automation is making strong inroads into the mobile machine market. In the automotive sector, intensive research is being conducted into safe automation and autonomous driving, while in the mobile machinery sector the scope is wider, and includes the automation of the work processes.

In order to move autonomously through road traffic, in the field, on construction sites and so on, an automated working machine must have various fundamental capabilities: it must perceive the environment, draw the right conclusions from the information obtained, and be able to act accordingly. Examples of applications, depending on the scenario, include the detection and identification of objects and obstacles, path planning and navigation, the calculation of complex machine models, the management of orders and missions and much more.

To properly address these use cases, new technologies are constantly being incorporated into the architecture of mobile machines. To identify these technologies, we conduct intensive research through various projects, in cooperation with other technology service providers and machine OEMs. In this context, we are concentrating on the further development of our electronic control and computing systems. Our new products form a generic, scalable, modular product family, so we can equip your highly automated applications with products from STW and its ECO system partners, tailored to your needs where necessary. In addressing the needs of your vehicle or machine, we always consider the overall architecture.

Demonstrator with a high-performance controller from Sensor-Technik Wiedemann

At Agritechnica 2023, Sensor-Technik Wiedemann (STW) demonstrated the future of automation in agriculture and forestry with its Future-Tech setup. At the center of this display was the new High Performance Controller (HPX), which lays the foundation for energy-efficient, resource-saving, highly automated and autonomous applications for mobile machinery. Thanks to the use of accelerated chipsets, the HPX enables fast data processing, which is essential for AI-based object recognition, precise image processing and the control of a wide and complex range of actuators, all of which are essential to increasing the effectiveness of agricultural operations.

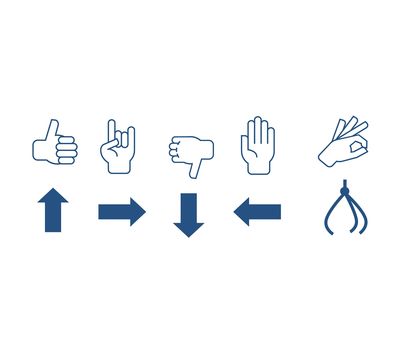

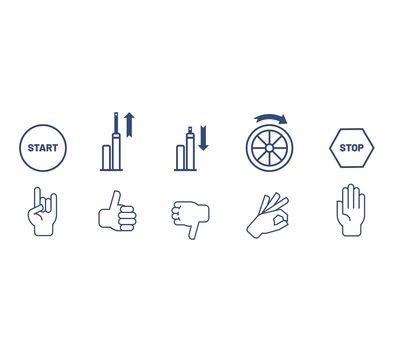

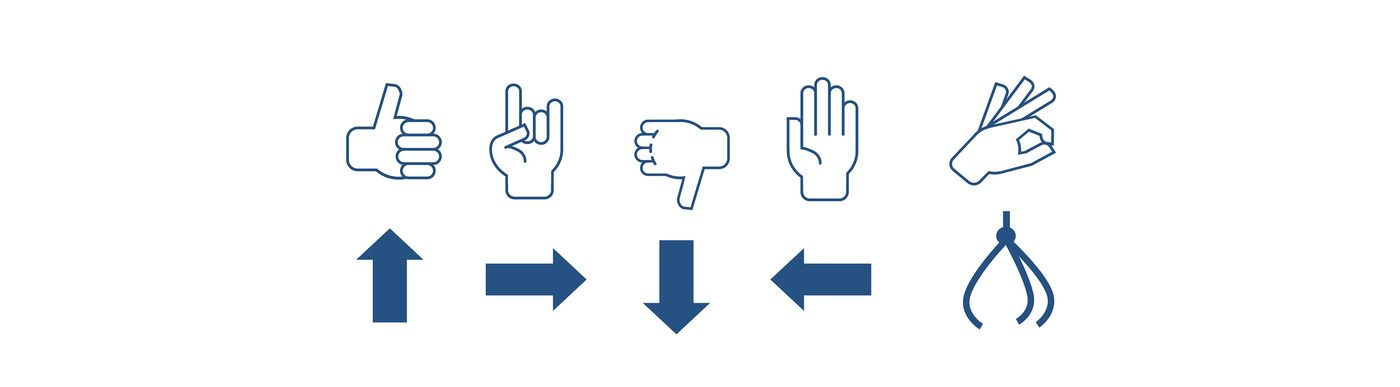

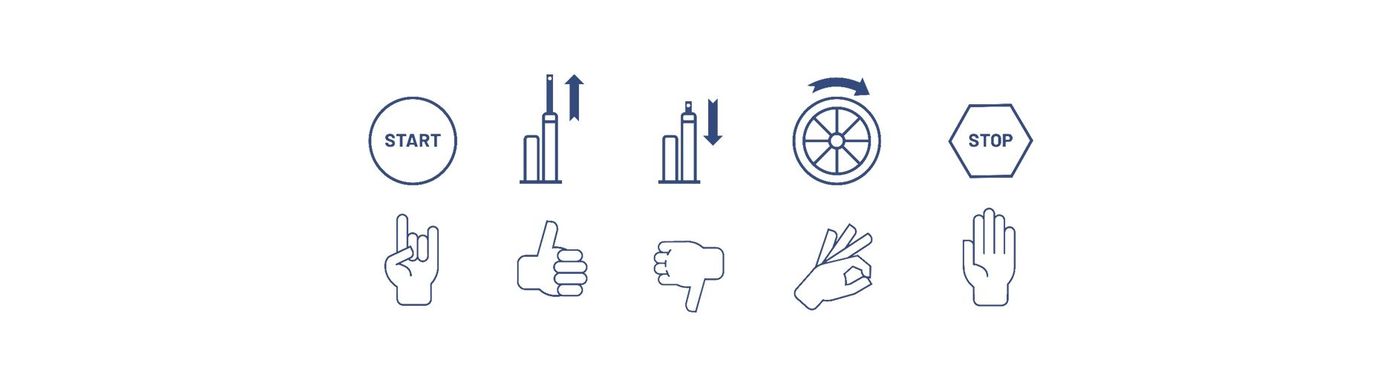

An impressive example of the potential of the HPX was an automatic gripper arm equipped with advanced gesture recognition. This machine was controlled by the HPX and a specially adapted artificial intelligence (AI). To realize this control system, dedicated application logic was developed, drawing on extensive programming knowledge and experience in applying AI to real-world problems. This enabled smooth and efficient operation of the gripper arm. Validation of the demonstrator technology took place in close cooperation with various experts, who tested the system intensively both to check its performance and to optimize the functionality and practicality of the AI algorithms. The successful implementation of AI in this project illustrates the potential of neural networks and machine learning in automation technology. The neural network, trained with an extensive data set of hand gesture images, was able to recognize complex patterns and correlations. As a result, it could identify and interpret different gestures, enabling intuitive control of the gripper arm.

Our aim is to operate neural networks in an energy-efficient manner on STW hardware. A user-friendly toolchain enables the rapid implementation of standard AI frameworks such as Caffe, PyTorch and TensorFlow as well as the efficient integration of custom AI layers using optimization and quantization tools.
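To give a rough idea of what a quantization step does, the following minimal sketch maps floating-point weights to 8-bit integers with a single shared scale factor (symmetric per-tensor quantization). It is a generic illustration only, not the STW toolchain; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8 values.

    Generic illustration; names and approach are assumptions, not STW tooling.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale factor for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate float weights."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    q, s = quantize_int8([0.3, -0.7, 0.12])
    print(q, dequantize(q, s))
```

Storing weights as 8-bit integers reduces memory traffic and allows integer arithmetic on suitable accelerators, at the cost of a rounding error of at most half a quantization step per weight.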

STW offers a powerful combination of sensor technology, control and data processing. This includes, for example, the inertial measurement unit IGS from the SMX family, the ESX controller family and the TCG communication modules, as well as the openSYDE software suite, and the ArkVision Cam from our partner ecosystem. As a result of many years of research and development, these components work together to expand the possibilities in agriculture and forestry and support the rapid market launch of innovative machines and functions.

AI-Vision Demo

In the future, the STW modular system will also support applications based on artificial intelligence. In initial prototype projects, we are transferring software innovations from other industries - such as automotive - into the world of mobile machinery, together with the STW ECO system partners.

With the help of the prototypes, we are gathering experience on how best to further develop our hardware control systems for future AI applications. The goal is to ensure that, with appropriate acceleration chipsets on STW hardware, neural networks can be run in an energy-efficient, resource-saving manner.

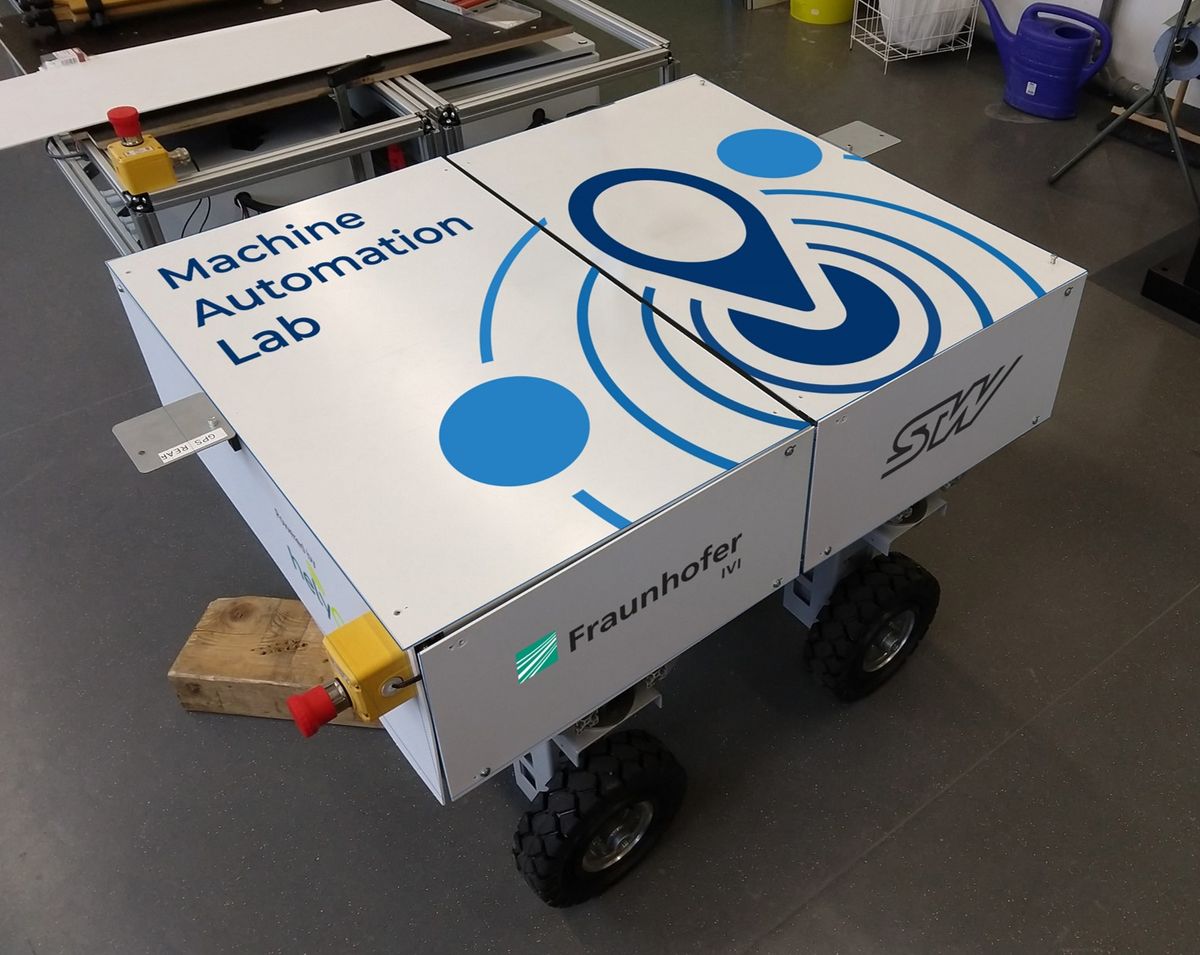

STWiesel

The STWiesel is a robotic vehicle developed as a technology and system building block demonstrator. We have equipped the STWiesel with various capabilities, for example autonomous indoor pick-and-place tasks. In this case, the STWiesel detects pre-defined objects, recognition of which has been trained using deep learning techniques. These objects can be approached using the environment sensors, autonomous path planning and navigation, and picked up by the front magnet. The STWiesel scans the environment continuously during navigation so that the vehicle can avoid any obstacles, including those that occur unexpectedly. After picking up an object, the vehicle drives to the destination and deposits the object there.
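The pick-and-place sequence described above can be pictured as a small state machine. The sketch below is an illustration under assumptions, not the STWiesel software: the state names and the boolean flags (`object_detected`, `at_object`, `holding`, `at_destination`) are hypothetical stand-ins for the results of perception and navigation. Obstacle avoidance is assumed to run continuously inside the navigation layer and is therefore not modeled as a separate state.

```python
from enum import Enum, auto


class State(Enum):
    SEARCH = auto()    # look for a trained object
    APPROACH = auto()  # navigate to the detected object
    PICK = auto()      # engage the front magnet
    DELIVER = auto()   # drive to the destination
    DEPOSIT = auto()   # release the object
    DONE = auto()


def next_state(state, *, object_detected=False, at_object=False,
               holding=False, at_destination=False):
    """Return the follow-up state for the given (hypothetical) sensor flags."""
    if state is State.SEARCH and object_detected:
        return State.APPROACH
    if state is State.APPROACH and at_object:
        return State.PICK
    if state is State.PICK and holding:
        return State.DELIVER
    if state is State.DELIVER and at_destination:
        return State.DEPOSIT
    if state is State.DEPOSIT and not holding:
        return State.DONE
    return state  # no transition condition met: stay in the current state


if __name__ == "__main__":
    state = next_state(State.SEARCH, object_detected=True)
    print(state.name)
```

Keeping the transition logic in one pure function makes each step easy to test in isolation before it is connected to real sensors and actuators.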

The STWiesel is the result of a needs analysis and answers the question "Which functions and solutions do mobile machine manufacturers need in relation to autonomous working and driving?". The functions have been realized with STW products and selected third-party products. Connectivity is provided by the TCG-4 communication module, and the SMX.igs-e sensor serves as an inertial measurement unit (IMU), which improves the accuracy of the Simultaneous Localization And Mapping (SLAM) process. Sensors from the M01 and T01 families are used for interaction as well as for the detection of the current climate conditions.

In addition to the lidar and a ToF camera, a switchable stereo camera consisting of two separate RGB cameras is used for environment detection. The signals are processed by our high-performance platform HPX in conjunction with the ESX.4cs-gw mobile control unit. The software modules are implemented and programmed with the STW toolset openSYDE, enhanced with selected Robot Operating System (ROS) functions. With these technologies, the STWiesel demonstrates autonomous driving and working.

ROVO AI

The ROVO AI is an application-neutral and emission-free technology demonstrator based on a tracked vehicle robotics platform. The vehicle can be adapted for just about any conceivable use - without compromising on autonomous operation.

In this partner project, we combine our automation expertise with HAWE's hydraulics know-how and vehicle platform and Robot Makers' software and system integration knowledge. Whether it’s for snow clearing, street sweeping, mowing, or field spraying - the ROVO AI serves as a basis for any of these applications.

Machine manufacturers benefit from a robust, modular and flexible platform that can be readily adapted to their own needs. This means more sustainable production with fewer variants, as only the attachment and the software need to be adapted to the application. At the end of the project, it should be possible to link all the necessary components via plug and play. The design of the system architecture on this basis is exceptionally user-friendly.

The ROVO AI underlines the importance of cooperative partnership and helps pave the way to a fully autonomous future for mobile machines.

MaliBots

In order to demonstrate the possibilities of control station guided communication of robot swarms, we are cooperating with the Fraunhofer IVI within the Machine Automation Lab (MAL) project. The Fraunhofer IVI is researching technologies and solutions for open source control station systems, while we are working on transferring the concepts for use cases in the automation of mobile machines.

In the control station system, cooperative maneuver planning is implemented for collision-free guidance of multiple vehicles. In case of disturbances, the control station also adaptively plans new routes and processes for the swarm. The swarm robots (MaliBots) communicate with the control station via the helyOS interface, both to coordinate the process and to report back the vehicle status.

The TCG-4 is used for communication and for the control and management of the robot. The control system interpolates the path from the nodes sent by helyOS and supplies both position variables for control and path speed values for feedforward control. Localization is performed by means of high-precision RTK. The corresponding sensors are connected to the TCG-4 and provide the vehicle position and orientation. The software of the MaliBot is modular. The individual modules communicate via DDS, which allows modules to be moved or exchanged within an ECU network. This helps enable rapid prototyping and increases system flexibility.
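The path interpolation step can be illustrated with a minimal sketch. It assumes linear interpolation between 2D nodes and a constant path speed, which the description above does not specify; the function name `interpolate_path` and its parameters are hypothetical. Each sample contains a position setpoint for the feedback controller and velocity components that could serve as feedforward values.

```python
import math


def interpolate_path(nodes, speed, dt):
    """Linearly interpolate (x, y) nodes at constant path speed.

    Returns a list of (x, y, vx, vy) samples spaced speed * dt apart along
    the path. Assumed scheme for illustration, not the MaliBot implementation.
    """
    if not nodes:
        return []
    samples = []
    carry = 0.0  # distance already covered on the current segment
    for (x0, y0), (x1, y1) in zip(nodes, nodes[1:]):
        length = math.hypot(x1 - x0, y1 - y0)
        if length == 0.0:
            continue  # skip duplicate nodes
        ux, uy = (x1 - x0) / length, (y1 - y0) / length  # unit direction
        s = carry
        while s < length:
            samples.append((x0 + ux * s, y0 + uy * s, ux * speed, uy * speed))
            s += speed * dt
        carry = s - length  # overshoot carries into the next segment
    # Finish exactly on the last node with zero feedforward speed.
    xn, yn = nodes[-1]
    samples.append((xn, yn, 0.0, 0.0))
    return samples


if __name__ == "__main__":
    for sample in interpolate_path([(0, 0), (2, 0), (2, 2)], speed=1.0, dt=0.5):
        print(sample)
```

A real controller would typically also limit acceleration and smooth the corners between segments; the sketch leaves this out to keep the relationship between nodes, position setpoints and feedforward speed visible.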
